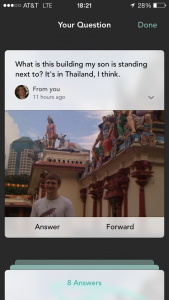

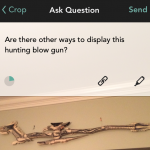

Biz Stone’s new visual Q&A platform, Jelly, launched this week. The mobile app lets you pose brief questions to your social network using images, and it defines that network rather expansively to include friends of friends on Facebook and Twitter. Interestingly, the app is positioned more for the helpers than for those crowdsourcing help. Have five minutes in line at Starbucks or the post office? Use them to help someone in your network.

The app discourages the long back-and-forth threads of Reddit and, at first glance, doesn’t seem to attract the thoughtful commentary of Quora. Because there is no way to sort responses by upvotes or downvotes, you have to wade through a bunch of bad answers and jokes to find the right one. The requests themselves also feel somewhat random (is this Chatroulette for fleeting questions?), and there is no categorization to steer you toward questions you might like to answer, as you’d find on Metafilter or QuizUp.

There are some great details in the UX, like the small Harvey ball that fills as you approach the character limit while typing a question. The sound effects are terrific, even if the stream of alerts is a little noisy. And the ease of sending a civilized, shareable thank-you should help the app spread through social networks.

But what’s the end game here? Is the use case differentiated and solid enough to make a visual Q&A platform like Jelly a standalone business? An alternative theory is that the app is a smart way to study an increasingly visual web. Gathering a large amount of data on how people across social networks respond to, understand, and share images would be a step toward solving a valuable problem. Combine that human sensibility with algorithms, and there might be a real opportunity to develop, at scale, insights into how well images perform in the visual web.